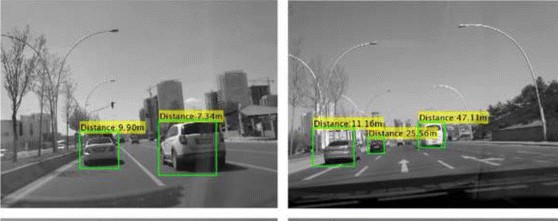

Vehicular Distance Detection

The main task was to detect vehicles (cars, in this case) and then calculate the distance between them. Vehicle detection was done by building a car vs. not-car classifier together with a heatmap. Vehicle distance detection was done as part of my exploration of YAD2K (a 90% Keras / 10% TensorFlow implementation of YOLO_v2).
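As a rough illustration of the heatmap idea, here is a minimal NumPy sketch. It is not the project's actual code: the function names, the `(x1, y1, x2, y2)` box format, and the `min_hits` threshold are assumptions made for the example. It also assumes the simplest possible distance measure, the pixel distance between box centers; converting that to a real-world distance would need camera calibration, which is not covered here.

```python
import numpy as np

def build_heatmap(shape, boxes):
    """Add 1 to every pixel covered by each box the classifier marked as 'car'.

    shape: (height, width) of the image.
    boxes: iterable of (x1, y1, x2, y2) pixel coordinates (assumed format).
    """
    heat = np.zeros(shape, dtype=np.int32)
    for x1, y1, x2, y2 in boxes:
        heat[y1:y2, x1:x2] += 1
    return heat

def threshold_heatmap(heat, min_hits):
    """Zero out pixels covered by fewer than min_hits boxes (likely false positives)."""
    out = heat.copy()
    out[out < min_hits] = 0
    return out

def center_distance(box_a, box_b):
    """Euclidean distance in pixels between the centers of two boxes."""
    ax, ay = (box_a[0] + box_a[2]) / 2, (box_a[1] + box_a[3]) / 2
    bx, by = (box_b[0] + box_b[2]) / 2, (box_b[1] + box_b[3]) / 2
    return float(np.hypot(ax - bx, ay - by))

# Two overlapping detections on one car and one isolated detection.
boxes = [(10, 10, 50, 50), (30, 30, 70, 70), (200, 200, 220, 220)]
heat = build_heatmap((240, 320), boxes)
hot = threshold_heatmap(heat, min_hits=2)
```

Only the region where the first two boxes overlap survives the threshold, which is how overlapping detections reinforce each other while one-off detections are discarded.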

Skills Used - Keras, TensorFlow, NumPy, h5py, Pillow, Python

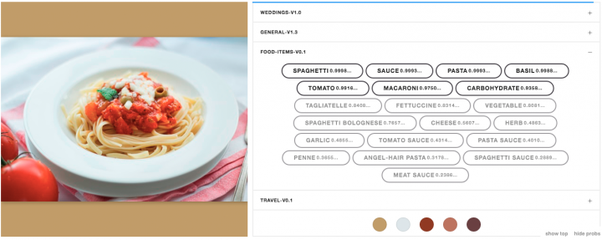

Recipe Generator

The main task was to recommend ingredients and recipes from nothing more than a food image. The problem was split into two parts: one neural network identified the ingredients it saw in the dish, while the other devised a recipe from that list, trained on the Food-101 dataset. It was done as part of my exploration of research by the Facebook AI team.

Skills Used - PyTorch, NumPy, SciPy, Matplotlib, Torchvision, NLTK, Pillow, TensorFlow, Python